JS

Other

By using flag --require ./path/toFile.js for node we can add some file to be called with main file we call

- usage: add some global module that is not required in other files

Node shortcuts

npm run start -> npm start -> npm s? npm install -> npm i npm run test -> npm test -> npm t

JSDoc

add typing to functions with native JS

to use, add comment before function in this format:

/*** @param {number} a* @param {string} b*/Interesting

- if you declare variable inside

<script>tag it will become global

Stop Throwing Errors

JS doesn’t treat errors as values, so we can’t be sure that function won’t throw at runtime without knowing it’s implementation

- this leads to risk of missing try/catch

we can fix it by using explicit return type like this:

type Return = {success: false; error: Error} | {success: true; data: SomeDataType}now we can be sure that function might return error, as well as handle it without try/catch

it is not always best solution, because sometimes it is good to throw error, so some middleware or other catcher will catch and handle it, but this strategy is useful

Data normalizing

Data normalizing - process of transforming arbitrary list of data to list where we have one entity at a time and in other places we have links to this entity

V8

JS engine by google

Compiles js into machine code with features like caching, optimizing, garbage collection etc Used in browsers and Node.js

Code inside try {} is not optimized and usually slower

Regex (Regular Expression)

Regex is used to validate/filter some text data Works by comparing substr inside str

Regex is heavy operation, so better use simple expressions for small data sets

- sometimes better use self written solution

Blob

Blob - binary large object, used in JS as basic protocol for working with binary data

- can be streamed

- there is blob->link conversion API, which is useful in cases, where you need to represent in-memory file via HTML element with src(or download via

a.href)- to force download from JS we can create invisible

acomponent and.click()

- to force download from JS we can create invisible

Service Worker

Service worker - browser api

-

for simplicity we can call it as hybrid of proxy and js(file) between client and browser

-

have ability to cache, work with requests etc

-

async

-

can’t work with DOM directly

-

is some file, that is scoped by page, globaly etc

- if we have global and scoped sw on page only scoped will work

Use Cases

- usually used with listening fetch event

- caching (work with

cachesobj)- can make website work offline

- usually better to init cache on install

Life Cycle

- Register. Triggers every time.

- cases other steps

- Download. Can be seen in network, on registration

- if registered won’t be reregistered later

- Install. Goes directly after download and linked to it

- can be listened as event

- Activation. Indicates that service worker is ready to work

- can be listened as event

- not always instantly triggered after install, so we can

self.skipWaiting()on install stage

- Update. Triggered after changes inside js file, after ~24hours of AFK

- causes downloading, installing and activation again

Other

- if we change request we will see old request and changed response

- active not working for first time, can be fixed with

event.waintUntil(clients.claim())on activate - can’t communicate directly, only with messages

- external lib: work box

Lodash

utility library to work with different data structures in compact and clean way

it is good practice to check if some method is present before writing it

popular methods are(by convention lodash is imported as _):

.sumBy(arr, () => number)// calculate sum from array.get(obj, 'location.to[1].propertie', default)// find some data from nested obj by path.groupBy(arr, () => propertie)// group list of data by some field.deepClone(obj)// return a deep cope of some obj.uniqBy(arr, 'field')// filters array of objects to be unique by some properte.sortBy,.orderBy// same, action is described in name

.isEmpty(arr)// checks if array is empty or even present- native clean way:

arr?.length > 0

- native clean way:

.isEqual(obj1, obj2)// check for deep equality

Requests

Ajax

Tool to reload-less client-server communication

XMLHTTP

Old, native, everywhere supported method to request some data

pros:

- you can track downloading progress, so it is great for file upload related stuff

Fetch

Modern, well supported, native way of doing HTTP requests

Axios

Similar to Fetch, but it is a lib with some additional features, like middlewares

EventLoop - Node.js

Node.js

Node.js - JS runtime build on top of V8 Chrome Engine, with event-driven, non blocking I/O model

I/O - input / output and refers to communication with PC’s CPU

- libuv lib is handling multiplatform async work with I/O for Node.js

Event-Driven model is based on Reactor pattern

- make I/O request(fs.read) -> passed to EventLoop -> passed to Event Demultiplexer -> calls C++ function -> result goes back to Event Demultiplexer -> result is wrapped into event and added to Event Queue -> when call stack is empty our callback is executed with data from event

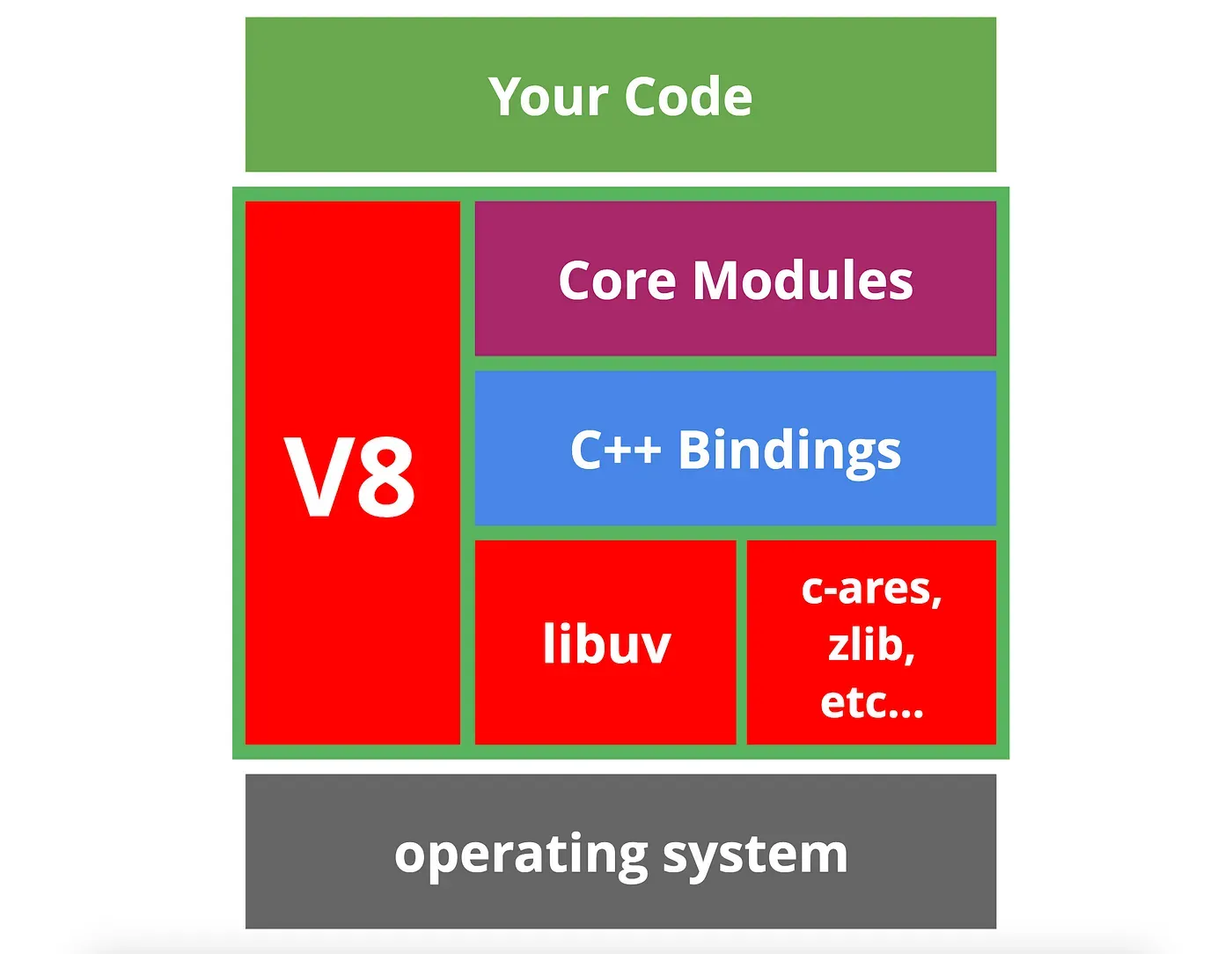

Node.js components

- V8 - engine for parsing and execution of JS

- libuv - provider of EventLoop and I/O operations

- core modules - non standard JS modules like http, fs etc

- c++ bindings - wrapper to require custom compiled C++ modules

- can be generated with Node.js compiler and required like this:

require('./my-cpp-module.node');

- can be generated with Node.js compiler and required like this:

- deps - other small utility libs for low level operations

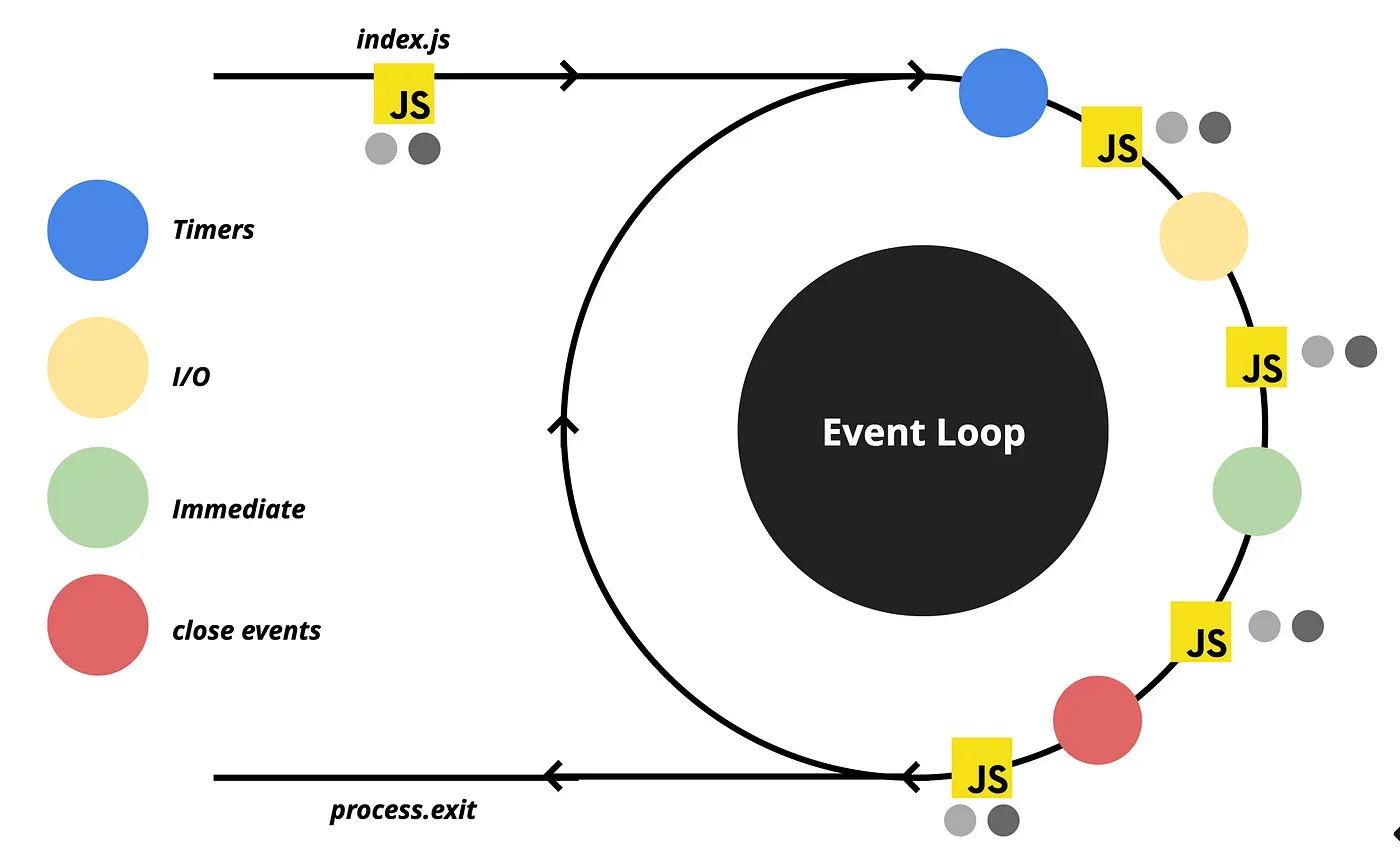

EventQueue - MarkroTasks

EventLoop - mechanism to semi-infinitelly process and handle events

Events are queued into several queues with different priorities and executed after stack is empty

| Queue Name | Description | Priority | Is all events need to be executed |

|---|---|---|---|

| Timer | queue for setTimeout, setInterval | 1 | + |

| I/O | most of async operations(network, system) | 2 | + |

| Immediate | queue for setImmediate(registered and executed as soon as JS picked it up) | 3 | + |

| Close events | queue for closing connection events(DB, TCP etc) | 4 | + |

| Empty loop ~= few milliseconds |

EventLoop is tracking how many ongoing tasks and when they are done, their count is decreased

- if equal to 0 loop is exited

setTimeout(..., 0) !== setImmediate, because EvebtLoop CAN(not always) be so fast, that timeOut won’t be registered at all for this cycle and will appear at next

EventQueue - MicroTasks

I/O Polling

- I/O events are added to their queue not when they are ready(as other events), but after I/O polling

Between each macrotasks we have JS execution period of time(same as before EventLoop), inside which we have micro tasks as process.nextTick(1 priority) Promise(2 priority), with their own dedicated queues

- there also others microtasks as MutationObserver, queueMicrotask etc

- microtasks are checked by V8, after all JS is executed

process.nextTick have the highest priority among all of others, BUT can block EventLoop and kill performance

microtask queues block EventLoop and must be cleared

in Node version < 11 microtasks was executed between queues and not macrotasks, but for web compatibility it was changed

EventLoop

EventLoop is not fully part of V8, V8 is only parsing and executing JS + microtasks, but macrotasks are done by libuv

EsModules

EsModules are async by design, so when we init our program, inside first EventLoop promises will have highest priority

libuv

I/O operations can be:

- blocking: file, dns etc

- require different thread

- non-blocking: network

- several tasks can execute in simultaneously

for blocking tasks libuv uses Thread Pool, so we can simultaneously execute this tasks on different threads(4 by default if any blocking operation is present in code) on top of that we have threads that occupied by default for garbage collection etc

DNS lookup is blocking, but can by made it non-blocking with some effort

Other

Custom promises(Bluebird.js)

- Can collet all promises into one macrotask to reduce blocking time

- Can executer promises on some other phase

EventLoop - Browser

Similar to Node.js EventLoop, BUT with addition to some DOM related tasks and with no strict order in macro/micro tasks queues

Most of the time JS engine doing nothing and runs only when some event, script, handler activates

Rendering happens between macrotasks and blocked by their execution

- some very heavy tasks can block whole browser(not responsive to user events), so browser might suggest to kill this process with page

Same as in Node microtasks queue empties in-between each macrotask

- microtasks are mostly come from promises, but can be also manually queued by

queueMicrotask(func)

macrotasks

- setTimeout

- setInterval

- setImmediate

- requestAnimationFrame

- I/O

- UI rendering microtasks

- process.nextTick

- Promises

- queueMicrotask

- MutationObserver

Other

- heavy tasks can be split into smaller once and wrapped into zero second setTimeout, that will not block DOM

- it is better to setTimeout earlier in code, because even zero second timeout has some delay in it

- you can show some loading via this split, because otherwise change in DOM will be shown after whole process is finished

Regex In JS

To create a regex in JS we can use new Regxp("pattern", "flags") or with /pattern/flags. This will resolve in Regex object

Methods on Regex object:

.test(string) -> booleancheck if string has a match.exec(string) -> array | nullexecutes regex and returns array of matching groupsarraywill include:- “0” - matched string

- ”groups"

- "index” - matched string index

- ”input” - original string

- it acts as an iterator and, when there is no left matches it return

null

regex flags(5 in-total, most important bellow):

g- search for all matchesi- case insensitive search

General Patterns

Patters provide common solution for different problems and serve as general guidelines There are list of JS specific or classical(with modifications) patterns

- Command - don’t execute methods directly, but create command interface with

executemethod and extend from it, by calling specific method with specific command- pros: decouple method usage from it’s user, command can be queued and reverted

- cons: boilerplate, complication of code

- Factory - create a method that will create class instances

- in JS we can also just create objects from giving parameters

- pros: creation of complex object(with backing env state into them), additional logic(caching, additional calculations), creation of objects with similar interface(but avoiding classes)

- cons:

classis mostly sufficient for this task(but class is still can be used with factory for adding additional logic over default constructor)

- Flyweight - split class’s data that is static(newer changes) and dynamic(can be changed often) and break into to classes, store “static” class in some cache, to prevent unneeded object creation(aka RAM usage) and reference it in “dynamic” class

- in JS we can also bind static and dynamic data via prototype inheritance

- pros: less RAM usage with large number of objects created

- cons: more complex code(in general you should only use it as optimization matter)

- Mediator - remove direct object communication and make them communicate(can be uni and bi directional) with mediator, that delegates all the work between objects and only processes request/result

- similar pattern is Middleware, that takes request, do smth with it and pass along the chain, where it can be taken by other Middleware or by receiver

- pros: less coupling between objects(makes many-to-many communication easier)

- Mixin - change inheritance with delegation, by passing mixin object with needed functionality to class and delegate tasks to it

- NOTE: passing functionality into class is more TS way, so to safely do so you need to specify type of this. Other approach is to modify prototype(not safe operation)

- mixin, by itself, can use inheritance

- only used to add functionality to other object

- example:

windowobject, that includes large number of different mixins

- Module - logically split code into independent, reusable chunks. With keeping parts of module private

- JS has many variations of modules, with newest and mostly recommended to use ESModules(import/export syntax)

- private fields prevent naming collisions

- other naming collisions can be prevented via

asrenaming, when importing some part of module

- other naming collisions can be prevented via

- ESM won’t pollute global scope(usually

windowobject) with exported parts of module - we can export some part of module as

defaultexport, which may be useful to indicate main functionality of module(for example react Component is default export, while it’s components, as not main part of module, are just exported) - module can be imported like

import someDefault from ""import {someExp1, someExp2} from ""import * as fullModule from "", where:fullModule.default->someDefaultfullModule.someExp1- note that this way is disregarded, because we might import unnecessary parts of module

- we still can’t import private parts of module this way

- when working with UI framework, it is a good practice to keep 1 module for 1 component

- when conditional or deferred import is needed we can use async

import("") -> Promise<module>to do so- often used as optimization matter

- unlike default imports, we can use template literals here

- transpiling is needed, to use modules in pre ES2015 envs

- basically the will merge modules into one file, with adding scopes via function wrapper

- observer - observer object is subscribed to observable object and notified each time, when some event in observable occurs

- great for working with events and async operations

- example: RxJS

- pros: separation of concerns(observable monitors events, observer handle function execution)

- cons: performance with too many subscribers

- prototype - share properties between objects(a way to do inheritance)

- native to JS(in pre classes we were basically managing prototypes by hand, now we have sugar syntax with classes)

- basically, when accessing an object method, JS will check object, if it has one, that it’s prototype and on and on and on

- as an example,

Object.create(proto)will create object, with assigning passed prototype to it

- as an example,

- it is possible, but disregarded, modify prototype on fly

- basically, when accessing an object method, JS will check object, if it has one, that it’s prototype and on and on and on

- pros: optimization(we only reference one object with needed methods)

- native to JS(in pre classes we were basically managing prototypes by hand, now we have sugar syntax with classes)

- provider - if data is needed in many places or somewhere deep in code, it is better to create provider, that will give access to the data, instead of passing it down via the props

- example: React Context

- in React, it is common to implement:

- helper hooks, that call

useContext(Ctx)and return value, with additionnullcheck, that throws, if it used outside context - HOC, that incapsulates logic of context and provides context value

- by doing both, we separate logic of getting and providing values from components

- helper hooks, that call

- in React, it is common to implement:

- use-cases: global data(current theme of an app)

- pros: avoid prop drilling(easier to refactor+read code, easier to find origin of value), global state

- cons: performance(consumers need to react to each change of context)

- to deal with performance, just don’t overuse context and break it into smaller one

- example: React Context

- proxy - add additional behavior to object, by wrapping it fully/partially in proxy

- basically, proxy adds layer of logic in front of method

- we can implement proxy or use built in JS

Proxyobject, that takes original object and config, that will change interactions with passed object- common config methods:

get(obj, property)set(obj, property, value)

- common config methods:

- other API to work with proxies is

Reflect, basically it is used as sugar to do standard operations easier, like:obj[property]->Reflect.get(obj, property)obj[property] = value->Reflect.set(obj, property, value)

- use-cases: validation, permissions, formatting, logging, debug

- be careful with performance

- singleton - allow only one instance of a class to be created

- implementation:

- generally just store nullable reference to created instance(as static filed), and return it, when user requests via separate method

- worst noticing, that it might be a good idea to freeze object, thus preventing accidental changes to it from outside

- also we can avoid class implementation at all and just create single object + freeze it

- in plain JS we need to

throwin constructor, if instance is no null - in TS we can make constructor private and create a factory to avoid throwing errors

- generally just store nullable reference to created instance(as static filed), and return it, when user requests via separate method

- use-cases: global shard state, optimization

- drawbacks: global state management problems(hard to test, unexpected behavior in parts that rely on this global state, data flow is unreadable)

- it is generally considered an anti-pattern(JS also accepts this statement)

- of corse we need global state in app, but it is better to use some pre-build solutions, that makes state read-only or implement proper mutation system

- implementation:

- static imports - default ESM

importis example of static imports pattern, which job is to give current module access to parts of other modules + include this parts in final bundle- static imports load data in locking sync manner

- islands rendering - allow to pre-render static parts of an app on server, with keeping dynamic parts as is(they can hydrate directly on client or via server-side rendering too)

- drastically improves performance(less JS shipped and ran on client) and CEO(all content is pre-generated) for an app, with keeping it interactive

- prioritization to content first and interactivity second

- better accessibility

- ABOUT REHYDRATION

- it is a process of binding into pre-rendered HTML with shipped near it JS on client

- differs from default hydration, by including pre-rendering step

- can be done in different ways:

- SSR - fully render on server

- Progressive - render part of an app, other parts are rendered and shipped as needed

- islands - break app into static and dynamic parts, ships static as is an dynamic with needed JS + do hydration

- hydration can be done in different moments of time:

- on client only

- on server + rehydrate on client

- island is self contained

- unlike SSR/Progressive rehydration, one component won’t block other, because their process of hydration is async

- hydration can be done in different moments of time:

- it is a process of binding into pre-rendered HTML with shipped near it JS on client

- how to do islands:

- render static content with no JS on server

- dynamic content must be filled with placeholders inside static content + rehydrated as soon as main thread is idle(requestIdleCallback)

requestIdleCallbackis used to implement scheduling

- allow isomorphic rendering, thus using same code to render on server and on client

- examples:

- Marko - automatically decide wether component is dynamic

- Astro - static site generator, that creates lightweight apps, that can use dynamic components from other frameworks. Allows lazy loading

- was built to work with islands with component based design

- Preact(static site generator) + Eleventy(isomorphic compatible components) - combination to do islands, with lazy loading

- use-cases: blogs, news, landings, e-commerce etc

- DRAWBACKS:

- not useful for highly interactive pages

- new topic(not many guides, patterns and practices are not existent yet)

- drastically improves performance(less JS shipped and ran on client) and CEO(all content is pre-generated) for an app, with keeping it interactive

- rendering - there are many modern techniques, when it comes to “where” and “when” to render

- NOTE: this section is not about general patterns, but kinda a vercel add ;)

- main way to choose is by finding best solution to your case, with consideration of UX and DX

- it will lead to: fast development, fast load times, low cost of processing

- remember that each pattern is designed to solve one issue with introducing other tradeoffs

- in general SSR or progressive hydration is a way to go, but they might be harder to cook, overkill or just not sufficient for some cases

- it might be a good solution to choose different patterns to different parts of an app

- MAIN CONCEPTS

- CWV(core web vitals) - set of optimization metrics to improve UX

- time to first bite

- time to interactive

- first contentful paint

- cumulative layout shift - visual stability of an app, to avoid interface shifts, when content is loaded

- largest contetful paint

- first input delay - time between user interacts and event is handled

- DX points to improve, so product is developed fast and smooth

- fast build times - build + deploy time

- easy rollbacks

- low server cost

- reliability of an app

- performance of dynamic content

- scalability(in both ways)

- CWV(core web vitals) - set of optimization metrics to improve UX

- PATTERNS:

- static rendering - render content on build and keep it as is

- used together with CDN(caching, faster request processing)

- TYPES:

- plain - render content on build and keep as is, with possibility to add dynamic components with rehydration

- pros: fast load times, CEO friendly, low cost

- cons: only for static content and pages that don’t rely on data

- with client-side fetch - render content on build with placeholders, that will be changed to dynamic components with rehydration, that will

fetchand paint data- pros: fast enough load times, CEO friendly, allow dynamic content

- cons: harder to scale, more server to keep running, content shift

- with server-side backing of the data(getStaticProps) - similar to plain, but with piping dynamic data to HTML, when building it

- similar to plain, but with slower build times

- also, we might need separate service for better separation of concerns

- it might be costly to do API calls on each build

- incremental static rendering - generate some pages at a build time, while other are generated, when user requests them

- we can easily cache the new generated pages

- for dynamic pages we need to set invalidate period, so next request will invalidate and trigger regeneration

- pros: fast enough load times, CEO friendly

- cons: high server cost(for very dynamic content invalidation is near instant, so non-needed invalidations might happen), might be harder to maintain, can’t be user specific, cached only on edge

- we can easily cache the new generated pages

- on-demand incremental static rendering - similar to incremental static rendering, but regenerations happens after event occurs and not invalidation happens

- pros: fast, cached CDN wide, CEO friendly

- cons: can’t be user specific, might be expensive

- plain - render content on build and keep as is, with possibility to add dynamic components with rehydration

- all in all, good solution for semi-dynamic pages with non-user specific data

- server side rendering - HTML is rendered on each request, that allows for highly dynamic pages, with user specific data and possibility auth

- in it’s core, each request produces custom HTML + with JS bundle send right after to do rehydration

- it is fast and easy to build, but pretty slow and costly

- also might need separate API service for separation of concerns(note that API call will block rendering, so it is better to keep it close or on same server)

- it can be optimized wit

- granular rendering of dynamic and non-dynamic parts(breaking app into islands)

- react server components to avoid shipping large dependencies to client

- static rendering - render content on build and keep it as is

- bundle splitting - modern JS code is often bundled together in large file, that can be slow to download and pars, which is blocking operation to render page

- it is useful to split parts of an app into separate bundles, making them load async in background, thus not blocking main thread

- it is a tradeoff operation, because we enable faster first load, but now need to wait for parts of an app to download, before user can interact with them

- it can be nicely done via

React.Suspenseor similar solutions to manage async state- it may be problematic to use with SSR, so some lib(like

loadable) can help here

- it may be problematic to use with SSR, so some lib(like

- note that additional bundler config may be required

- it is not possible to do auto bundle splitting, so it is mainly used with large libs

- it is useful to split parts of an app into separate bundles, making them load async in background, thus not blocking main thread

- animating view transitions - modern browser API to add transitions, between DOM changes(from small toggles to page changes), that is very useful for SPA apps

- from JS perspective we call

document.startViewTransition(cb), where callback will do changes- basically browser makes snapshot of DOM state before and after

cbcall, and then do smooth transition in between

- basically browser makes snapshot of DOM state before and after

- by default we will get linear crossfade with some default time, but it can be changed via CSS, like so:

::view-transition-old(root),::view-transition-new(root) {animation-duration: 2s;animation:...}- to do more granular actions, we can add groups via names like so:

view-transition-name: photo-heading;, to reference elements + specify containment:contain: layout;, which allows us to do partial animations, only on this groups - to do navigation animations, we can call

startViewTransitionon navigation, BUT return a promise, that will wait until re-render happened- this won’t block interface, when re-render or page fetching happens, but when promise resolves, transition is smoothly performed

- for snappier animations we can do alternative approach, by animating out first HTML, wait until new page loaded + animation completed, animate in second HTML, BUT this way we are loosing transitions between elements

- all in all, there are plans to do native animations between pages OR you can use some lib :)

- to do more granular actions, we can add groups via names like so:

- from JS perspective we call

- compressing JS - there are quite a few techniques to compress and split your bundle, so here are some strategies to do so with pros&cons(some can work against each other)

- JS is largest, after images, by contribution to page size, so it is important to keep an eye on it’s size

- general info

- mathematically, compressing one big file will make final size smaller, that breaking and compressing it parts

- when bundling and serving files use gzip or brotli(better both, so users with newer browser will get better, brotli compressed, data, when still leaving gzip option for older browsers)

- browser will always tell what it supports via

Accept-Encodingheader, while server usesContent-Encodingto indicate one- using this headers you can also see, are really files compressed

- this will be also told by LightHouse report

- using this headers you can also see, are really files compressed

- browser will always tell what it supports via

- HTTP compression - compress data to take lower space

- lossy - some data is lost(reasonably), with higher compression, which is great for media files

- lossless - data is compressed into less size, but can be precisely restored back

- done with gzip, brotly etc on build OR serve step

- doing on build will result in slower build times, but lower server resources usage and higher compression

- you can even do both, but it might be not really useful

- minification - reduce file size, by removing unnecessary data(whitespaces, long variable names etc), while keeping code valid

- common for JS and CSS, but can be used with other files, like HTML etc

- as a good practice, library author should provide minified

.min.jsfile - done on build step with some tools(pick most popular one ;) )

- bundling and splitting code - we can pack together or split or JS code to load all at once, or by some chunks

- about:

- module - encapsulated part of program

- bundle - modules packed together, after compilations

- bundle splitting - breaking bundles into separate parts, for better caching, isolate publishing, deferred downloads

- chunk - part of splitted bundle

- it is important to have always request parts be in base chunk and other are packed together with ranking of needability

- all related code should be bundled together

- pros/cons:

- larger chunks will take less total space

- smaller chunks will be better cached

- if action results in another chunk loading, actions will take longer time than it should

- all in all, recommended size is around 10 chunks, but you can break some heavy dependencies or parts of an app into separate chunks

- it is considered best practice to move 3d-party dependencies into separate chunks

- about:

- import on visibility - pattern to defer load of some data, to point when it becomes visible

- it can be done to static files like images, API calls(infinite scroll) or even parts of bundle via some libs(

react-lazyload,react-loadable-visibility) or hand rolled- all of it is based on

IntersectionObserver API

- all of it is based on

- don’t forget about tradeoff, that now user needs to wait until something is loaded

- it can be done to static files like images, API calls(infinite scroll) or even parts of bundle via some libs(

- prefetch - pattern to fetch some data in background, that might be used in future(result in near instant interactions with “non-loaded” data)

- ways to achieve:

- HTML:

<link rel="prefetch"> - HTTP:

Link: </js/chat-widget.js>; rel=prefetch - Service Workers

- some custom solution with bundlers

- HTML:

- don’t over prefetch, it may cause slowdowns, only do when user will most likely need that data

- a good rule of thumb is to disable prefetch(if possible) for low tier devices

- ways to achieve:

- import on interaction - some heavy parts of an app(often 3-d party) can be loaded async, when user interacts with component, that depends on it

- if we initially load resource, it will block the thread, so we can make it load in deferred way, BUT it will slow down interaction with this component, so don’t overuse this technique

- ways to do:

- Eager - default way to load right away

- lazy on route navigation

- lazy on click

- this is good alternative to SSR, which can lead to poor experience of page loaded, but not yet intractable, WICH won’t happen to lazy click, because we can interact with page, it just takes a bit longer to download smth

- note that more complex technique includes tracking user clicks and replaying them, when JS is loaded+inited

- we can also start loading on hover, but it maybe more resource draining

- by doing it async, data that needed by the components will also fetched in async

- this is good alternative to SSR, which can lead to poor experience of page loaded, but not yet intractable, WICH won’t happen to lazy click, because we can interact with page, it just takes a bit longer to download smth

- lazy on scroll into view

- prefetch - load after main thread, when idle

- preload - load as soon as possible

- for your code it is better to do prefetch

- exception is cases, where user can click on something, before it is prefetched

- with 3-d party code you can do it in any point of time

- good technique to utilize is some facade component, that acts as placeholder with bare functionality of original code, that loaded in async manner

- example:

- video player can be facaded with image and play button and when user clicks, it goes into loading state and replaced by actual player

- login button that loads SDK separately

- chat button that waits until users needs to chat

- example:

- how to implement:

- dynamic async import in JS

- alternative is to dynamically inject JS

<script>into page

- alternative is to dynamically inject JS

Suspense+lazyin React- to break bundle itself proper bundler config is needed

- dynamic async import in JS

- one more technique is to replace embedded code with some static(generated progressively or on build time based on some data) with link to more interactable version

- optimize your loading sequence - app performance and WebVitas passage is heavily influenced by how/when we load files

- what can cause poor loading times:

- requesting resources in wrong order(web vitals are estimated in specific order)

- underutilizing CPU/Network

- example: if loading files in parallel, CPU will start processing JS much later, then doing it sequentially

- heavy 3-d party libs

- resource optimization

- inlining CSS, bundling&splitting JS, several image sizes(depending on platform, low-quality placeholders), removing dead code

- JS can also have additional problems with: poor written modules that can’t be tree-shaked, unneeded ES5&Polyfilling

- inlining CSS, bundling&splitting JS, several image sizes(depending on platform, low-quality placeholders), removing dead code

- what can be done:

- inline critical CSS for better FCP OR at least serve it from same origin as HTML with preload

- for 3d-party CSS you can create a proxy

- don’t over-inline, because it causes slower HTML parsing and disables caching

- fonts should be requested as soon as possible, with

preconnect- most common solution is to provide font-fallback, but be careful with jumping layouts

- for images use low-quality placeholders, with same visual size, but lower resolution(be careful with making it too low and not trigger LCP)

- if image is not visible, use lazy loading

- for JS:

- be careful with sync 3P scripts

- inline critical CSS for better FCP OR at least serve it from same origin as HTML with preload

- what can cause poor loading times:

- tree shaking - process of removing any unused code from bundle

- unused code means code that don’t used anywhere else or parts of code with no side effects

- usually done automatically be bundling system, but can be tricky in some cases

- general process of doing is to parse code into AST and travers it to determine

- EDGE CASES:

- only ESM modules can be tree shaken

- when module is imported it is executed and if such execution contains any side effects(global css or scope modifications etc) it won’t be shaken

- preload -

<link rel="preload" />allows to prioritize load of one resource over others, no mater where it is located in document- such resource might block any other load, so be careful of overusing it

- still it can be a great way to load critical resources upfront + in parallel, if done like so:

<link rel="preload" href="emoji-picker.js" as="script" /> <script src="emoji-picker.js" async />

- still it can be a great way to load critical resources upfront + in parallel, if done like so:

- notes:

- HTTP preload header has higher priority over script tag

- font is best candidate for preloads

- img preload has lowest priority

- be careful with preloading script, that is a part of dependent(not preloaded) script

- server need to server preloaded content with priority too

- such resource might block any other load, so be careful of overusing it

- optimize loading 3d-parties - there are set of best practices for loading external resources

- where slow-down comes from:

- calls to 3d-party server

- larger resources(bulky JS, unoptimized images etc)

- resource influences page in unpredictable way

- blocking behavior included into resource

- to find pain points use Lighthouse, WPT and Bundlephobia

- ways to fix:

- remove resource OR replace with lightweight alternative

- optimize how/when resources are loaded:

- async/defer for non-critical scripts

- note that it will lower browser priority for resource

- it may be good to even defer some scripts to the point, when page becomes interactive

- establish early connections to required origins using resource hints for critical scripts, fonts, CSS, images

- lower time for getting resource by dns-prefetch or preconnect(includes dns-prefetch)

- lazy load non critical or out-of-view resource

- use

loadingattribute with iframes - use some lib or custom Observer API implementation to defer loading

- use facades(self written or 3d-party implementations) + load on interaction

- be careful with sizings and layout shifts

- use

- self-host JS scripts and fonts to lower request trips(no need for TCP handshakes and DNS look-ups)

- it is also possible that 3d-party have poor caching or compressing

- great deal when using with HTTP/2

- consider using CDN as storage

- don’t forget to update your resources from time to time

- use caching via service workers if possible

- enables better control over cache and offline mode

- lowers need to self-host

- don’t use resources like Google Tags, reCaptcha etc, on pages, where they are not needed

- you can even defer their load, on pages where they are truly used

- with Google Tags you must not load it, when user denies Cookies

- async/defer for non-critical scripts

- there is a great implementation of

<Script>component in Next.js, that gives you out of the box optimizations or some libs like Partytown, that do it in agnostic way

- where slow-down comes from:

- list virtualization / windowing - render only part of list/content and change this rendered part onScroll, rather then rendering full list at once

- all in all just use some lib to handle it, because it is quite tricky

- and I just don’t like the general UX with problems like

cmd+fsearch, that related to it :/

- and I just don’t like the general UX with problems like

- in its core creates one tall DOM element, that roughly the height of

elementHeight*numberOfElementsand small DOM element, that have scroll overflow and changes what elements are renderd- this results in massive performance boost, when talking about ginormous 1k+ lists

- it is also possible, but less common, do windows for 2D layouts

- also great in combination with infinite scroll

- user won’t lag himself, by scrolling to long

- there is more native approach with CSS’s

content-visibility:auto, that acts as optimization matter for out of view content, but it is still better to do virtualization for dynamic pages(it is just more efficient)

- all in all just use some lib to handle it, because it is quite tricky

Open Social

Open Source software is common standard nowadays, which might be great example for social networks AND data in general and became an inspiration for AT Protocol (atproto)

Common view of the web - each party has it’s own data, enclosed inside their websites, while still can be shared and co-linked by other websites

- in such model you can host your data via any provider AND don’t depend on them, because you have stable addresses

- BUT most often data is published into other party’s website, thus you not only loose data ownership, but also vendor lock yourself to it

- and Close Social with our current social media apps emerge

- it has benefits:

- data can be turned into different representations

- data has single source, thus global search, ML, feeds, notifications etc is enabled

- Close Social creates tight social space

-

If you can’t leave without losing something important, the platform has no incentives to respect you as a user.

- you can’t even export data (technically you can, but it won’t be meaningful without other parts of social graph and representation)

Open Social

- each user will have domain-like identifier, that bounds data to him

- data is stored in repo and server as signed JSON and could be freely moved from one vendor to other, while still preserving graph integrity

- basically we have same web structure, BUT not in form of hyperlinks, but it form of linked JSONs

- other services can be integrated in this system, but letting them create records in your repo

- so you can interact with any system, BUT you can also represent and use their data in different formats

- services can easily interact with one another without need for API

- this leads to possibility of forking OR creating analogs of products

- to query & aggregate data efficiently you can use websocket (with single user OR aggregated socked with data about many users) and clone data into your DB

- all traffic is cryptographically signed, so you can verify it’s legitimacy

at://

The technical details on how atproto works and resolves it’s URIs

- main part of at points to user, after which we specify format and some query

- this means that we need to some steps to locate where data is physically hosted

- resolutions:

- handle -> identity

- handle might change, BUT identity can’t and acts as stable point, so multiple handles can resolve to single identity

- you get identity by handle via DNS or HTTPS, BUT given identity need to verify back that it truly associated with given handle

- this means that you should store identity links, not human readable links

- to verify handle relation you identity provides data with list of owned handles

- it will also point to server address with data (identity -> host)

- you can have identities in

webformat OR inplcformatwebis standard, decentralized one, which creates risk of loosing access to your identityplcis on of vendor locked format, that acts as registry, thus has less decentralization

- host -> JSON data

- just combine server address with data from URI and query normally

- handle -> identity

- you can just use SDK to do resolutions for you, BUT account that ideally you should hit local OR remote cache instead of DNS first

You don’t know JS book

I’ve also had many people tell me that they quoted some topic/explanation from these books during a job interview, and the interviewer told the candidate they were wrong; indeed, people have reportedly lost out on job offers as a result.

Naming and Spec

JavaScript is named after Java as branding for “web Java” and name still owned by Oracle JavaScript/JS has official name ECMAScript(ES) and it is also a standard for how to implement JS(browser, Node.js erc)

TC39 is tech committee that decides on new changes and later addresses them to ECMA

- TC39 is managing open source proposals too

- every proposal are gone gone through 0-4 stages

JS engines (ideally) fully implement JS spec

Spec has appendix B that includes some historical inconsistencies in browser JS spec

Some methods like alert, console.x, fs.write etc aren’t in spec, but universal-ish in JS environment

JS is muliy-paradigm lang

Updates

JS is backward compatible(new changes don’t break old code), but not forwardcompatiable(new changes can’t be run in old version)

- HTML/CSS are opposite

To address forwardcompatiable problem transpiling(babel is most popular) invented

- transpiling - converting new syntax to old one, like

let->var

Transpiling won’t help in new-new-API case, so dev can write polyfill(wrapper for new API that implemented with old APIs)

- there are library of official polyfills

- often babel can add polyfills

Interpretation

There two polar types of langs: interpreted and compiled, BUT it is not binary and rather a spectrum

- interpretation works by executing code line by line

- any errors are throw in run time

- compiling works by converting code to Abstract Syntax Tree (AST), analyzing for static(syntax, type etc) errors, then to binary code and then executed

JS has a parsing step and kinda compiled, but not fully

- kinda, because after parsing it has conversion to optimized binary code(binary intermediate representation), that can be executed by virtual machine aka JS engine(similar to Java) JS also has Just-In-Time optimizations on BIR(post parsing)

WebAssembly(WASM)

In it’s core WASM is tool to convert some lang to binary representation of JS, that can be read by JS VM without need in additional optimizations

It is also suitable like general purpose VM

- ironically it is hard to WASM to convert JS itself because of lack of type-safety :)

Strict

Optional(highly enforced in new code bases) list of additional rules to make JS safer and cleaner

- best-practice is Strict + linter + prettier

- de facto is default but technically not for backwardcompatability

some of strictness comes in form of new Static errors

in strict this defaults to undefined

turned on with "use strict"; on first line per file

- can be not on first line if there are comments/blank space above

ES6 modules enforces strict

Files

Each file is a program, but executions helps them to organically communicate, by mixing them in a runtime

- it is done, so if one file throws, other will still operate Seamless communication is achieved via global scope, BUT after ES6, we can make files to be scoped(modular)

Values

- primitive

- string - ordered collection of chars, can be defined with quotes(` ’ ”)

- ` - doing interpolation on string, aka resolving some value into string

- boolean

- number

- bigint

- null

- undefined

- symbol - unguessable, uniq value, that can be used as object key

- created by Symbol(“string”), BUT Symbol(“string”) !== Symbol(“string”)

- string - ordered collection of chars, can be defined with quotes(` ’ ”)

- objects

- array - special type of object for ordered, numerically indexed collection of items

- object - unordered, keyed collection of items with string keys

typeof tells type, BUT with some catch :)

typeof 42; // "number"typeof "abc"; // "string"typeof true; // "boolean"typeof undefined; // "undefined"typeof null; // "object" -- oops, bug!typeof { "a": 1 }; // "object"typeof [1,2,3]; // "object"typeof function hello(){}; // "function"coercion is converting between types

value can be literal(declared in place) or stored in some var

Var, let, const

junior interview be like

| var | let | const | function | |

|---|---|---|---|---|

| mutability | + | + | -* | - |

| block scoped | - | + | + | - |

| hoisting | + | - | - | + |

* - if const is a pointer, it’s referenced value still can be changed, BUT it can be const via TS as const |

Functions

We can call JS functions as procedure - collection of statements that can be invoked one or more times, that has output/input

function name(){}- function declaration, with name to func mapping on compile stateconst name = function(){}- function expression(function is assigned to to var as expression), where function is associated with it’s name on runtime

JS functions are treated as values(or special type of Object) and can be passed around as in Functional Language

- function in JS is first-class value

Func can receive 0-infinity parameter(local to function vars)

Func can be set as Object param

Comparisons

- strict equality

===- compare two values, without possibility of converting types- type equality is always checked, but in === it is strictly must be the same

- NOTE

- 3 === 3.0 // true

- NaN === NaN // false

- Number.isNan(NaN) // true

- Object.is(NaN, NaN) // true

- 0 === -0 // true

- Object.is(0, -0) // false

- {} === {} || [] === [] etc // false

- because it is pointers comparison(opposite to structural equality, where we compare content)

- coercive comparisons OR loose equality

==- compare two values, with converting to same type if possible- agreed to be dangerous to use

==and===do same value comparison- tend to do primitive numeric comparison

- can’t be avoided, because

> < >= <=are also coercive :)- two strings will resolve in dictionary-like comparison

Code Organization

- classes

- class defines a “type” of custom data structure that includes data and behavior

- class is not a value, but value can be got from class via

newaka class is instantiated- methods can be called only on instances

- inheritance is JS is done via

extends+super(), so we can define common parent and extend it’s functional - JS classes also have polymorphism, because they allow children classes to override existing methods

- modules

- have same goal as classes to combine data with behavior, with additional possibility of module interactions

- Classic Modules

- it is similar to classes, but we are creating a function, that incapsulates data and returns some object with methods to interact with data inside

- factory pattern

- it is similar to classes, but we are creating a function, that incapsulates data and returns some object with methods to interact with data inside

- ES Modules

- wrapping function is changed to wrapping file that incapsulates all data and

exportsall behaviors - we can say ESM is a singleton, caze it’s instance created on first

importand after that other imports receive a reference

- wrapping function is changed to wrapping file that incapsulates all data and

Iteration

It is important to have consistent method to work with large quantity of data, that’s why we have iterator pattern, that can iterate through some set of data and stop iterating at some point

there is next() method in JS, that returns object named iterator result, which consists of done: bool and value: any, where done indicates, that iteration of set is finished

for of is a syntax to consume iterators

... - spread and rest operator

- SPREAD

- this form of operator is an iterator consumer

- we need some place to spread data into(array, function call)

iterator was created as base for iterable values aka value that can be iterated

- iterator instance created from iterable on demand

- string, array, map, set etc - iterable, so we can do smth like this:

const greeting = "Hello";const chars = [ ...greeting ];

chars; // [ "H", "e", "l", "l", "o" ]- map has iterator that returns tuple value in a form of

[key, value].keys()gives us a list of keys.values()gives us a list of values.entries()gives us tuple, but for any iterable(for array it will be:[index, value])

Closures

Closure is when a function remembers and continues to access variables from outside its scope, even when the function is executed in a different scope.

objects don’t have closures, only functions

common closure use-case - async functions, when we close some data inside function, that is executed after some time, but still remembers data

This

Function has two characteristics:

- scope - attached to function via closure, represents a list of static rules, that controls resolution of references and values. It is attached, when function is defined

- hidden inside JS engine

- context - similar to scope, but attached on call stage and can be accessed via

this. Context is dynamic and can differ from call to call- object, that has properties, that made available for function

- this aware function - function, that depends on it’s context

if this aware func is called without strict mode, it will look to it’s context and then to global window object, in order to resolve value

WAYS TO MAKE THIS AWARE FUNCTION:

- call function as object method -> this === object

- function.call(obj, arg1, arg2) -> this === obj

- function.apply(obj, [arg1, arg2]) -> this === obj

- const f = function.bind(obj) -> this(inside f) === obj

classes are heavily based on this

Prototype

NOTE: JS is one of not many, who give access to direct object creating

this is characteristics of a function and prototype is characteristics of an object that helps to resolve property access

- basically prototype is hidden link between two objects

- prototype chain - series of objects linked via prototype

- it is called “behavior delegation”

- prototype helps in delegation(inheritance) of methods

- by calling some method on current obj, JS will try to find it on this object first, than on it’s prototype in chain and return first occurrence || undefined

const newObj = Object.create(obj) - safe way to set newObj.prototype to obj

- if obj is null, newObj will have no prototype(event Object.prototype)

this highly benefits from prototypes. It is dynamic in JS and can resolve from different objects. Example:

var homework = { study() { console.log(`Please study ${ this.topic }`); }};

var jsHomework = Object.create(homework);jsHomework.topic = "JS";jsHomework.study(); // Please study JS

var mathHomework = Object.create(homework);mathHomework.topic = "Math";mathHomework.study(); // Please study Mathas we can see this.study resolves from homework, but this.topic still from object itself

Scope

Scope is well defined list of behavioural rules about variable and how engine interacts with them

Lexical scope model

- scope is a block of code in which variable can be accessed

- can be nested

- variables from higher scope can be accessed, but not from lower

- how variables are placed determined at parsing/compiling stage

JS is has lexical scope model, BUT with some differences:

- hoisting - variable can be declared at any place of scope, but treated as it is declared at beginning of it

- var-declared - by declaring variable with

varinside the scope it is accessible outside a block - temporal dead zone(TDZ) - by declaring variable with

const/letthere is a part of program, where you can try to call in-scope variable, but it is not accessible yet, because it is not declared- result in

ReferenceError

- result in

let x = 10;if (true) { console.log(x); // ReferenceError: Cannot access ‘x’ before let x = 20; // decalre}Why JS Uniq

Prototype, Closure+Scope, Types+Type Equality

Values and References

In JS type determines if our variable will contain value or reference

Primitives are always passed as copies

Object values(like functions, arrays, objects) are stored as reference, so we can pass and copy the pointer, but not value itself

Function forms

We have function declaration and function expression(setting func to var)

Function expression can be done with anonymous function, BUT it and some other functions will still have .name property, reflecting declared OR variable name

- mainly used for stack trace

- function expression’s name will be

""if it is passed as an argument - function is still anonymous because it can’t refer to itself,

.nameis just metadata

We can also declare var with named function expression(name of var and func can be different, BUT .name will be from expression)

Generator function can be declared like this:

function *generator(){...}Generator function - function with between-call state, that can finish it’s execution

- main usage - write some iterable data structure example:

function *gen(){ yield 1; yield 2;}

const g = gen()g.next() // {value: 1, done: false}g.next() // {value: 2, done: false}g.next() // {value: undefined, done: true}

gen().next() // {value: 1, done: false}Also there are arrow functions, Immediately Invoked Function Expression(IIFE), async variations, class/object methods(not different from function in JS terms)

- arrow functions are anonymous by design

- key difference is that arrow function is referring to

thisfrom place it was declared AND NOT from place it was called, so it kinda closer to scope and perfect for callbacks inside methods

showSkills() { this.skills.forEach(function (skill) { console.log(`${this.name} is skilled in ${skill}`); // this.name === undefined }); },Coercive in conditionals

if, while, for, ? : have different from === or == comparison type, they are doing conversion to boolean each time, so:

// if("hello") === if(Boolean("hello") == true)So we still meat coercive comparison, BUT in a way of just type transformation to boolean

Prototypal “Classes”

Aka classes before classes

It is old syntax, but classic for JS and it looks like this:

function A() {}

A.prototype.hi = () => console.log("hi");

const a = new A();mathClass.hi(); // hiIt is possible, because all functions in JS refer to empty object as prototype, that will become prototype of objects, created from function via new

Practice 1

- comparison

str.slice("")can be changed withstr.match(regex)"07:15" < "07:30" // true- example of valid string alphabetic comparison, that can be used to compare time, if time is in valid format

Code compiling

We can break compiling into 3 main stages:

- tokenizing/lexing - breaking up string of chars into meaningful chunks(tokens)

- lexing is figuring if symbol is token itself or part of other token

- parsing - converting list of tokens into Abstract Syntax Tree(AST)

- code generating - different for every platform/language. For JS it is converting AST to machine instructions, that will execute

In-depth JS engine is deeper, because it has optimization(while compiling and on-fly), lazy comping etc

- this is needed, because JS compilation needs to be seamless and fast, because we aren’t fully compiling like in C++

JS can be broken into compiling and execution, because of syntax errors, early errors and hoisting

to handle variables compilers break them into two types:

- target(variable is a target if value is assigned to it)

- examples:

const a = 53for (const a of aa)a(53)- assign 53 to some argumentfunction a() {}

- source(opposite to target)

Modifying scope on-fly

It is impossible to do this in strict mode and dangerous to say the least, but can be done with:

eval(string)- compiles and executes string inside as JS code at runtime, with modification to scope on-fly- modifies scope, that eval was executed in

- BAD BECAUSE

- code injection, unexpected behaviour, performance degradation(re-compilation on every function call)

with(obj)- turns object into function like scope, with properties converted to scoped vars- BAD BECAUSE

- readability, performance degradation(scope is dynamic, so dynamic re-compilation is present)

- BAD BECAUSE

const obj = { a: "A" };

with (obj) { console.log(a); // A}Lexical scope

lexical scope is determined by placement of functions, blocks and variable declaration in relation to each other

varis associated with nearest function, butconst/letwith nearest block({})

variable must be available from current scope or lexically available/outside scope, otherwise usually error will occur

compilation don’t allocate memory or anything else with variable data, INSTEAD compilation create map of lexical scopes, so JS identify and not create a scope

Details

- variable is always connected to scope, that it was created in, not the scopes it can be accessed

- properties are not vars, so they aren’t connected to scope

- scope is fully contained in other scope, never split between two/more scopes

- if we are referencing the value(not declaring it), JS performs a look up, trying to find our var in current/outer scope

- but it not performed at runtime, it mostly already known at that stage

- var declaration like this

var arr = [];can be seen as 2 step proses:- Compiler sets declaration

- Engine looks up variable, initializes it and assigns variable

- each scope will get new ScopeManager instance

- each scope has identifiers registered at the start of it(hoisting)

- each var in function scope will be associated with this function

varis always initialized at start of scope, butlet/const- not

Lookup failures

Happens if no scope is left, but variable is still not found Different between strict/normal modes, as well as between role of var(source/target)

unresolved source always trows ReferenceError, but target will throw only in strict mode

- often

ReferenceErrorlooks like this:Reference Error: XYZ is not defined.and means that var has no declaration

undefined often means that var was defined, but have no variable at the moment

- NOTE: typeof empty declared and undeclared variable is always

"undefined" - in non strict mode, if variable is target(we try to set it to something), it will be declared and set to that data in global scope

Scope Chain

Connection between scopes, which determines lookup path

Lookup process is kinda conceptual, because all metadata needed is determined during initial compilation

- this information is immutable

- this metadata stored in/near AST and used at runtime sometimes lookup is still needed, when scope can’t be determined at compilation

- it happens when variable is declared in other file, that yet not processed, so such variables stay undetermined, until deferred lookup

- lookup failure still will happen, if scope is undetermined and variable referenced in execution

Shadowing

Basically having two same named variables, but in different scopes (one variable shadows other)

to consider, when shadowing, all inner scopes will loose access to shadowed variable to

un-shadowing(not recommended)

- only way is through global/window object

- possible, because

var,functiondeclaration on global scope will create getter+setter on global object, that works as mirror to our variable- add property to

globalwill also create variable in global scope

- add property to

- possible, because

note

letalways shadowsvar, butvarcan only be shadowed if it is inside function NOT a block- otherwise - syntax error, because of hoisting

- named function declaration

const a = function b() {}will create not hoisting variableain outer scope and varbin function’s inner scopebis always readOnly(in non strict mode, assignment will fail silently)

- arrow function behaves similar to function expression scope-wise(even without

{..})- but remember, that it is anonymous by design

Two files, one program

Several files can be stitched together in this ways:

- ES modules - each file loaded separately, all needed functions are imported as references to files

- no shared scope

- Bundling - bundler concatenates all files into one

- usually each file is enclosed as one function, with exported methods as function methods, that can be accessed via shared scope(that also can contained in function, like “application” scope)

<script>- each JS file imported via script tag which is done with bundler or by hand- files can be still concatenated, but without wrapper function, or they can be loaded in default way, where each file is independent, but they share global scope and communicate through it

Global Scope

Global scope is place to several modules to communicate

Also this is a place, where JS exposes it’s APIs, primitives, natives etc

- also environment exposes it’s APIs(console, DOM etc)

- note: node has global scope, but it’s APIs technically not exposed there

Global scope is glue for JS apps, but not a dumpster field

Each JS environment handles global scope bit different

windowfunctionandvar, declared on top level of an app, will appear on window object- top level variables shadows any global scope variables(properties of

windowobject) - any DOM node with

idwill create global scope variable with the same name, which contains reference to this node- legacy behavior, not recommended to use

windowobject has predefinednameproperty, which is getter/setter and always astring

- web workers - browser API, that allows to run JS in separate from main JS thread

- limited in browser APIs and have restricted communication with main thread, to avoid race conditions

- instead of

window, web workers haveself - same

varandfunctionbehavior

- dev tools - process JS code in no-separate environment

- behaves differently and less strictly, in comparison to usual JS, to favor dev experience(DX)

- some errors are relaxed and not displayed

- behavior of global scope

- hoisting

let/constin top level scope

- code is been executed in emulation of global scope

- not good enough to verify complex JS behavior

- behaves differently and less strictly, in comparison to usual JS, to favor dev experience(DX)

- ES Modules(ESM)

- top level

functionandvarwon’t create any global property- we can imagine it in a way, that all our code is wrapped in a function, so we can access global global scope, but not in a classical JS way

- all communication with outer files are done via

import/export

- top level

- Node

- Node treats all files as modules(ESM or CommonJS)

- top level of file is never affects a global scope

- all code is wrapped in function, that exposes some APIs to it(like parameters)

- to assign global property Node exposes

globalobject, that is reference to real global scope

- Node treats all files as modules(ESM or CommonJS)

global scope object can be get with

const glob = (new Function("return this"))();- function can be constructed from string and run in non-strict mode

thisof such function will be global object reference

globalThis is introduced as standardized universal variable, that will always reference environment’s global scope object

- not completely useful, because of old versions in-compatibility

Hoisting

function declaration and var variables can be accessed from beginning of function scope due to hoisting

- note, function hoisting includes function initializing and setting a reference to a function, BUT

varcreate anundefinedplaceholder and later fills it with data - to be specific,

let/constalso hoist to top of block scope, but they have TDZ of unusability

hoisting happens at compile time, where functions are hoisted first and then variables

Re-Declaration

re-declaration of var variables will do nothing, if we aren’t explicitly setting new value

with let/const re-declaration is not allowed and will throw SyntaxError

- even if re-declaration uses

varit will still throw - it has no technical reasons to be so, just stylistic

note, re-declaration is not happening in loops, because:

- each loop iteration creates a new, clean scope

- var will hoist out of loop and be just re-assignment

- it also true for

forloops, we can conceptually say thatiis declared per loop, but program keeps track of currentivalue via other scoped variable like this:- note, it won’t work with

const, if you will re-assigni

- note, it won’t work with

{ let _i = 0;

for (; _i < 3; _i++) { let i = $$i; ... }}Const

empty const declaration will throw SyntaxError, because re-assignment is impossible and will cause TypeError

- important that re-assignment will throw a run-time error

TDZ

Means that variable is exists, but not initialized, so we can’t use it, event if we try to declare them like this:

a = "a"

let a;happens, because compiler is instructed to initialize variable on line 3

- if function referencing variable and called before it’s initialization,

ReferenceErrorwill be thrown

good practice to put const/let as high as possible in block to avoid TDZ

Scope Exposure

It is good practice to lower scope exposure and make program functions with least amount of open data to make it more secure

It is bad idea to use global scope only because of:

- name collisions

- unexpected behavior(it is generally bad to expose private function, because it may be used unpredictable)

- dependency problem(others may depend on your private API and any change may cause big refactor)

Scoping with functions

We can limit scope via IIFE(Immediately Invoked Function Expression), so it be block-like, but also working for var/function

- note: IIFE must always be surrounded with

() continue/breakwon’t work inside IIFE for outer loopthisis re-binded inside IIFE

Scoping with blocks

{} will create a block, but scope will be created only if some variable is declared inside

- object literals aren’t blocks

classdeclarationfunctionbody is a statement with function scopeswitchdeclaration

catch is block+scope, with block scoped parameter, which is optional

function declaration inside block is block scoped by TC39, BUT in browser environment it will be function scoped, with initialization on block execution(so undefined by default)

- this leads to conditional function definition :)

if (a) { function b() { console.log("a is true"); }}else { function b() { console.log("a is false"); }}Closure

- only relevant to functions/class methods

- function must be invoked in different scope

- based on lexical scope, but observable at a runtime

we can say that closure is reference from inside of a function to variables, from different scope(basically function is enclosing that variables)

- closures are also created for pointer functions

- closure is not a snapshot, but editable

interesting point, that by using var declaration for i we are getting shared enclosed i, so it will be equal in all closures, BUT with let we will have separate, re-declared variables

common usages

- async

- callbacks

- handlers

- remember some information, by computing it once and enclosing

- partial implementation

- currying

in theory we can say, that there is no need to enclose global scoped variables, unused variables etc as optimisation matter

- but there is need to account for

eval()etc

alternatively view to closure is that our function is stays in place, and reference to it is passed, so enclosed variables are just simply accessed by function

Closure lifetime

It is important that closure can cause memory leeks, because garbage collector can’t pick up variable, that been closed over

good practices:

- always unsubscribe event listeners/other cb based functions

- manually unset variables(set to

nullto discard some large objects), that are not needed anymore(not really practical, but can be useful in some optimizations)

Module pattern

main way to organize code in JS is to break code into small, independent modules, that encapsulate some data/private methods and expose public

basically module is just a collection of data, private/public functions

types:

- namespace - group some independent(by state and purpose) functions together, in common namespace, like “utils”

- data structure - group data and functions together, without access control

- modules - data + functions + access control, but it is a singleton by design

- module factory - function, that creates modules

- OTHER

- CommonJS - module, that created on per-file basic

- public API is added via assigning something to

module.exportsobject - module can be imported with

const obj = require("path")- if

"path"is not absolute, Node will try to look atnode_modulesfolder and assume, that file hasjstype

- if

- public API is added via assigning something to

- ESM - module, per-file basic. Similar to CommonJS, but always in

strict modeimport/exportkeywords are used to import/export APIsexport- must be on top level scope

- can be placed before variable declaration

- via

export defaultwe can make imports easier(no need to de-construct APIs from object)- non-default exports are named as “named exports”

import- only top level scope

- ”named” export can be re-named with

as - ”namespace” import is done like this:

import * as A from ...and allows to collect every named export into some object’s properties

- CommonJS - module, that created on per-file basic

Scope deep dive

non obvious scopes:

- function parameters scope

- simple parameters won’t create their own scope, but: default values, rest parameters and destructed parameters - will, some examples and corner cases:

function k(b = a, a)will cause TDZ errorfunction k(a, b = () => a)will create parameter and function scope(for default valuea)varwill initialize to function parameter value and notundefinde- so it is not recommended to shadow parameters

- simple parameters won’t create their own scope, but: default values, rest parameters and destructed parameters - will, some examples and corner cases:

- function name scope

- if we declare function like this:

const a = function k()..., function namekwill create it’s own scope, “between”aandk, so we can still shadow it

- if we declare function like this:

Functions naming

Naming functions is good for:

- stack trace debugging

- self referencing(recursion, un-subscribing from something)

- easier to read but can make code uglier, because arrow function are always anonymous :(

NOTE: don’t make any scene if any additional code processing is used

function is not named, when it is passed as value to some variable, or object method

- to be sure, function won’t have real name, rather it will take name of method/variable name, so it’s name will be “inferred”

- this won’t give function a name:

config.a = function(){}

- this won’t give function a name:

hack to name IIFEs - name by purpose, that is usually to wrap some data, so: StoreSomeData

- main way to define IIFE is to: wrap it in

(), use+,!,~(it will work as(), by making JS engine evaluate function), or use explicitvoidoperator, that evaluates and returnsundefined

TDZ origin

TDZ originates from const, because:

- it is strange to first part of block be shadowed and other part not, so we need to hoist our

constdeclaration to top - we can’t auto-init

consttoundefined, because it won’t be constant

let got TDZ behavior to be similar to const

Callbacks

- async - some function(part of code), that was suspended and will be invoked later

- sync - some function, that passed as reference to be immediately invoked in other part of program

- base for

- Dependency Injection(DI) - passing some dependency(functionality) from one part of program to other, so it can perform some actions(example:

mapiterate, but it don’t know what to do) - Inversion of Control(IoC) - control from current part of program handed to other part(

mapcontrols invocation of some logic)- based on that, we can say that we invoke libraries functions and framework invokes our functions

- Dependency Injection(DI) - passing some dependency(functionality) from one part of program to other, so it can perform some actions(example:

- base for

we can say, that any callback is an IIFE, because it is declared in place and invoked, there for there is no need to IIFE to have any closure(it will rely on lexical scope)

- note: it is just one way to represent callbacks

More about Modules

Most classic modules just return object, that represents their public API, but it might be useful to declare some publicApi object, to save reference to public API inside module and only later return it

- problem arrives, because we depend on hoisting here

Asynchronous Module Definition(AMD) - variation to define classic module, presented in RequireJS(library for in-browser/Node module loading) and looks like this: